◎ Eye of Agamotto

As is well known, Node.js is a popular JavaScript runtime built on V8 and libuv, with a large collection of third-party packages managed by npm.

How can we enable Node.js in OpenResty so that we can reuse its rich ecosystem?

See https://github.com/kingluo/lua-resty-ffi/tree/main/examples/nodejs for the source code.

lua-resty-ffi

https://github.com/kingluo/lua-resty-ffi

lua-resty-ffi provides an efficient and generic API to do hybrid programming in openresty with mainstream languages (Go, Python, Java, Rust, Node.js, etc.).

Features:

- nonblocking, coroutine-based

- simple but extensible interface that supports any C ABI compliant language

- once and for all: no need to write C/Lua glue code anymore

- high performance, faster than the Unix domain socket approach

- generic loader library for Python/Java/Node.js

- any serialization message format you like

Just as with Python, we can implement a loader library to embed Node.js in each nginx worker process.

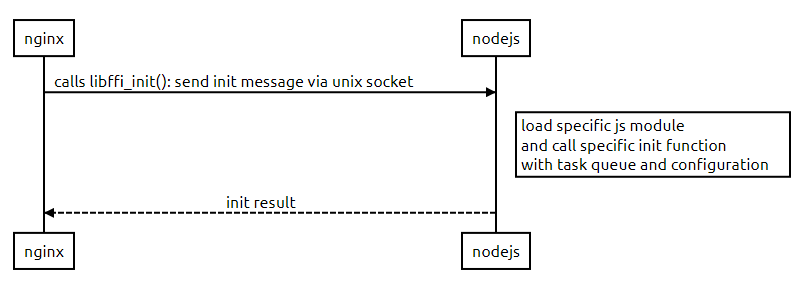

Runtime initialization

In lua-resty-ffi, libffi_init() is defined in the language-specific shared library and is used to initialize an FFI runtime (you can have multiple runtimes on the same shared library, each with a different configuration).

One runtime instance normally consists of one polling thread plus other threads.

How do we implement libffi_init() for Node.js?

Node.js allows only one instance per process (i.e. node::Environment), although you can have multiple V8 instances (i.e. v8::Isolate). So all FFI runtimes share this one Node.js instance. Unlike Python, Node.js does not provide a C API for interacting with it while it is running, so we have to find another way to do the init.

I chose a Unix domain socket.

The Node.js main thread creates a Unix socket server when libffi_init() is called for the first time. Each time nginx calls libffi_init(), it sends an init message, which contains the task queue pointer address and the configuration string, to the Unix socket server. When Node.js receives the init message, it loads the specified JS module to do the real init and finally returns the result to nginx.

Note that the Unix socket is used only for FFI runtime init, not for the later request-response flow between nginx and the FFI runtime, which uses lua-resty-ffi IPC.
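To make the init handshake more concrete, here is a minimal sketch of the Node.js side. The wire format is an assumption made for illustration (one JSON object per line with hypothetical `module`, `func`, `cfg` and `tq` fields); it is not taken from lua-resty-ffi. The task queue address is sent as a decimal string and kept as a BigInt so that a 64-bit value is never rounded through a double.

```javascript
'use strict';
const net = require('net');

// Assumed message shape (hypothetical, not the lua-resty-ffi format):
// {"module":"demo/echo","func":"init","cfg":"...","tq":"140230912345678"}
function parseInitMessage(line) {
  const msg = JSON.parse(line);
  return { module: msg.module, func: msg.func, cfg: msg.cfg, tq: BigInt(msg.tq) };
}

// Parse one init message, load the requested module, call its init function
// and build the reply. `load` defaults to require(); it is injectable so the
// logic can be exercised without real modules on disk.
function handleLine(line, load = require) {
  try {
    const msg = parseInitMessage(line);
    const rc = load(msg.module)[msg.func](msg.cfg, msg.tq);
    return { rc };
  } catch (err) {
    return { rc: -1, error: String(err && err.message) };
  }
}

// Serve init messages on a Unix domain socket, one JSON reply per message.
function startInitServer(socketPath, load = require) {
  const server = net.createServer((conn) => {
    let buf = '';
    conn.setEncoding('utf8');
    conn.on('data', (chunk) => {
      buf += chunk;
      let nl;
      while ((nl = buf.indexOf('\n')) >= 0) {
        const line = buf.slice(0, nl);
        buf = buf.slice(nl + 1);
        conn.write(JSON.stringify(handleLine(line, load)) + '\n');
      }
    });
  });
  server.listen(socketPath);
  return server;
}
```

Calling `startInitServer('/tmp/ffi_nodejs.sock')` (the path is a placeholder) would start listening. Only the init handshake goes through this socket; requests and responses would travel over a separate channel, as noted above.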

◎ init flow

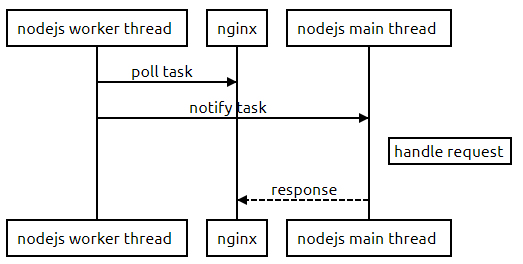

Threading Model

Node.js is single-threaded. The main thread uses libuv to provide event-driven, nonblocking I/O processing. But Node.js also supports worker threads, which are usually used for CPU-intensive or other blocking tasks.

Polling tasks from nginx is blocking, so we can dedicate one worker thread to polling. Once the polling thread gets a task, it passes the task to the main thread via parentPort, which is a MessagePort allowing communication with the parent thread.
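The division of labor between the polling thread and the main thread can be sketched as follows. This is only an illustration: `blockingPoll()` is a stand-in for the real blocking call into lua-resty-ffi (here it simply sleeps with `Atomics.wait`), and the task shape `{ id, req }` is hypothetical.

```javascript
'use strict';
const { Worker } = require('worker_threads');

// Source of the polling thread. blockingPoll() is an assumption for this
// sketch: it blocks the worker thread (not the main thread) for about 10 ms
// and then returns a fake task, or null once `count` tasks have been produced.
const pollerSource = `
  const { parentPort, workerData } = require('worker_threads');
  const sleeper = new Int32Array(new SharedArrayBuffer(4));
  function blockingPoll(n) {
    Atomics.wait(sleeper, 0, 0, 10);
    return n < workerData.count ? { id: n, req: 'task-' + n } : null;
  }
  for (let n = 0; ; n++) {
    const task = blockingPoll(n);
    if (task === null) break;       // queue closed: let the worker exit
    parentPort.postMessage(task);   // hand the task over to the main thread
  }
`;

// Runs on the main thread's event loop; here it just echoes the request.
function handleTask(task) {
  return { id: task.id, rsp: task.req };
}

// Start the polling worker and process every task it forwards.
function startPoller(count, onResponse) {
  const worker = new Worker(pollerSource, { eval: true, workerData: { count } });
  worker.on('message', (task) => onResponse(handleTask(task)));
  return worker;
}
```

For instance, `startPoller(3, (rsp) => console.log(rsp))` would log one response per forwarded task while the main thread stays free to serve other events between messages.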

◎ request flow

Wrap lua-resty-ffi API

Unlike Python with cffi, Node.js lacks a good FFI library.

The best known one is node-ffi-napi, but I don't know how to use it to convert a long integer into a pointer, which is exactly what I need for the task queue pointer conversion. Moreover, the project itself states that it has a non-trivial call overhead, so it is not suitable for busy request flows.

Luckily, in Node.js you can easily develop an addon module that couples with C, based on Node-API.

Node-API (formerly N-API) is an API for building native Addons. It is independent from the underlying JavaScript runtime (for example, V8) and is maintained as part of Node.js itself. This API will be Application Binary Interface (ABI) stable across versions of Node.js.

The function prototype is flexible: you can take an arbitrary number and types of input arguments from JavaScript and return any type of output value to JavaScript.

For example, I wrap ngx_http_lua_ffi_get_req() into a JavaScript get_req() function, which returns a string representing the request from nginx.

How to use it?

This is the simplest example:

demo/echo.js
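As a rough illustration of the shape an echo handler could take (this is not the repository's actual demo/echo.js), the sketch below uses a mock object in place of the native addon. `get_req()` mirrors the wrapper described above; `respond()` and `makeMockFfi()` are hypothetical names introduced only for this example.

```javascript
'use strict';

// Stand-in for the native addon (an assumption for this sketch).
// get_req() returns the request string for a task; respond() is a
// hypothetical name for the call that sends the result back to nginx.
function makeMockFfi(requests) {
  const responses = [];
  return {
    get_req: (task) => requests[task],
    respond: (task, rc, rsp) => { responses.push({ task, rc, rsp }); },
    responses,
  };
}

// Echo handler: read the request string of a task and send it back unchanged.
function echo(ffi, task) {
  const req = ffi.get_req(task);
  ffi.respond(task, 0, req);
}
```

With the mock, `echo(makeMockFfi({ 1: 'hello' }), 1)` records a single response carrying the original string; with a real addon the same handler would run on the main thread for each task forwarded by the polling worker.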

Specify the entry module and function in Lua and use them:

Conclusion

With lua-resty-ffi, you can use your favorite mainstream programming language, e.g. Go, Java, Python, Rust, or Node.js, to develop in OpenResty/Nginx and enjoy its rich ecosystem directly.

You are welcome to join the discussion and star my GitHub repo: https://github.com/kingluo/lua-resty-ffi