Back in 2019, when I was working as an independent postgresql/nginx consultant, many clients asked me whether they could do hybrid programming in nginx.

They said nginx/openresty is powerful and fast, but its most significant drawback is that it lacks the ecosystem of mainstream programming languages, which hurts most when they have to interact with popular systems such as kafka, consul and k8s. The C/Lua ecosystem is slim. Even if they bite the bullet and write C/Lua modules themselves, they face the awkward fact that most of these systems do not provide a C or Lua API.

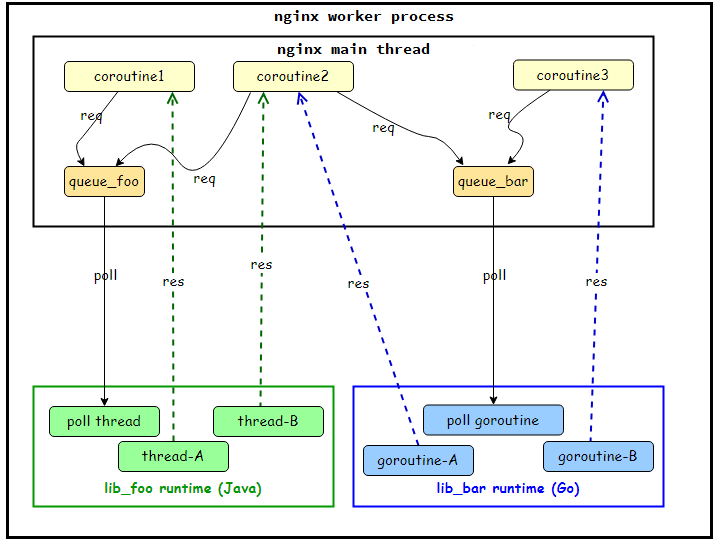

As is well known, nginx runs multiple worker processes, each with a single thread. In that main thread it does nonblocking, asynchronous event processing. Openresty wraps this in a Lua API and combines coroutines with epoll into the cosocket concept.

Meanwhile, each mainstream programming language has its own threading model. So the problem is: how do we couple with them in the coroutine way? For example, when I call a function in Rust (which may run in a thread, or in a tokio async task, whatever), how do I make it nonblocking in Lua land, so that call and return map onto yield and resume?

Of course, the traditional way is to spawn a separate process to run Rust and, on the lua side, talk to it over a unix domain socket, also known as the proxy model. But that way is inefficient, in both development and runtime. You need to encode and decode the messages in TLV (type-length-value) format, and you need to maintain nginx and the proxy process separately, along with failover and load balancing.

Could it be as simple as an FFI call, but with high performance? The answer is yes: I created lua-resty-ffi.

lua-resty-ffi

https://github.com/kingluo/lua-resty-ffi

lua-resty-ffi provides an efficient and generic API to do hybrid programming in openresty with mainstream languages (Go, Python, Java, Rust, etc.).

Features:

- nonblocking, in the coroutine way

- simple but extensible interface, supports any C ABI compliant language

- once and for all, no need to write C/Lua code to do the coupling anymore

- high performance, faster than the unix domain socket approach

- generic loader library for python/java

- any serialization message format you like

Architecture

Each combination of library and configuration initializes a new runtime, which represents a set of threads or goroutines that do the work.

lua-resty-ffi has a high performance IPC mechanism, based on a request-response model.

A request from lua is appended to a queue. This queue is protected by a pthread mutex.

The language runtime polls this queue. If the queue is empty, the runtime waits on it via pthread_cond_wait().

Under load, almost all enqueue/dequeue operations happen in userspace, without futex system calls.
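The request path described above can be sketched roughly as follows. This is only an illustration in Python, not the actual lua-resty-ffi code (which is C on top of pthread); `RequestQueue` and `worker` are hypothetical names, and `threading.Condition` stands in for the mutex plus `pthread_cond_wait()` pair.

```python
import collections
import threading


class RequestQueue:
    """Hypothetical model of the request queue: a deque guarded by a
    condition variable (a mutex plus a wait/notify mechanism)."""

    def __init__(self):
        self._cond = threading.Condition()
        self._items = collections.deque()

    def put(self, req):
        # Producer side (the nginx main thread in the real design).
        with self._cond:
            self._items.append(req)
            self._cond.notify()

    def get(self):
        # Consumer side (the language runtime): sleep only when empty.
        with self._cond:
            while not self._items:
                self._cond.wait()
            return self._items.popleft()


def worker(queue, results):
    # Stand-in for a runtime thread; None is a made-up shutdown marker.
    while True:
        req = queue.get()
        if req is None:
            break
        results.append(req.upper())


queue = RequestQueue()
results = []
thread = threading.Thread(target=worker, args=(queue, results))
thread.start()
for msg in ("a", "b", "c"):
    queue.put(msg)
queue.put(None)
thread.join()
```

When the consumer is kept busy, `get()` finds an item on most calls and never reaches `wait()`, which is the Python analogue of staying in userspace.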

lua-resty-ffi makes full use of the nginx nonblocking event loop to dispatch responses from the language runtime. The response is injected into the global done queue of nginx, and the nginx main thread is notified via eventfd to handle it. In the main thread, the response handler sets up the return value and resumes the coroutine waiting on the response.

As is well known, linux eventfd is lightweight. It is just an accumulating counter, so multiple responses are folded into one event.

Both request and response data are exchanged in userspace.
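To make the "folded into one event" point concrete, here is a toy model of the response path. It does not use the real eventfd syscall: the `_counter` field only imitates the accumulate-then-reset behaviour of an eventfd counter, and `DoneQueue`, `post`, `read_event` and `drain` are hypothetical names, not part of nginx or lua-resty-ffi.

```python
import collections
import threading


class DoneQueue:
    """Toy model: runtime threads post responses and bump a counter;
    the main loop reads the counter once and drains the whole queue."""

    def __init__(self):
        self._lock = threading.Lock()
        self._items = collections.deque()
        self._counter = 0  # imitates the eventfd counter

    def post(self, task_id, result):
        # Runtime thread: enqueue the response, then "write 1 to the eventfd".
        with self._lock:
            self._items.append((task_id, result))
            self._counter += 1

    def read_event(self):
        # Main thread: like reading an eventfd, return the accumulated
        # count and reset it. (A real eventfd read would block or fail
        # with EAGAIN at zero; this toy simply returns 0.)
        with self._lock:
            count, self._counter = self._counter, 0
            return count

    def drain(self):
        # Main thread: take every queued response in one pass.
        with self._lock:
            items = list(self._items)
            self._items.clear()
            return items


done = DoneQueue()
workers = [
    threading.Thread(target=done.post, args=(i, f"result-{i}"))
    for i in range(3)
]
for w in workers:
    w.start()
for w in workers:
    w.join()

wakeup_count = done.read_event()   # one read covers all three posts
responses = sorted(done.drain())   # sorted because thread order is not fixed
```

Three responses arrive, but the main loop needs only one read of the counter before it resumes all three waiting coroutines.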

What does it look like?

Take python as an example.

|

|

Call it like a normal function from your lua coroutine:

|

|

Benchmark

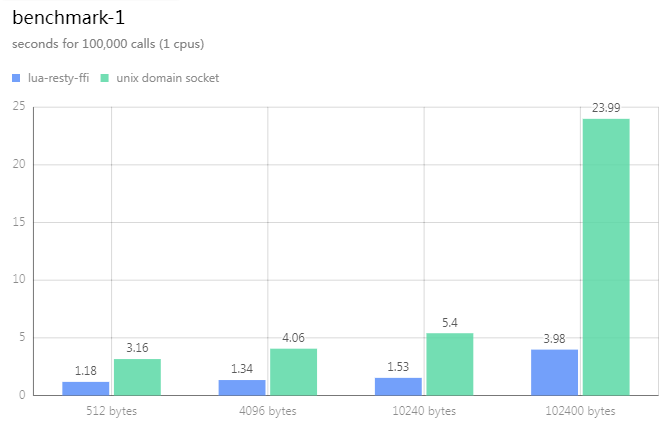

Each run sends 100,000 requests, with message lengths of 512B, 4096B, 10KB and 100KB respectively. The result is in seconds, lower is better.

You can see that lua-resty-ffi is faster than the unix domain socket, and the difference is proportional to the message length.

Check the benchmark for details.

Develop GRPC client in Rust

Let’s use lua-resty-ffi to do some useful stuff.

In k8s, GRPC is the standard RPC mechanism. How do we make GRPC calls from lua?

Let’s use Rust to develop a simple but complete GRPC client for lua, covering unary, client/server/bidirectional streaming calls, as well as TLS/MTLS.

This example also shows how efficient development with lua-resty-ffi can be.

Check https://github.com/kingluo/lua-resty-ffi/tree/main/examples/rust for code.

tonic

tonic is a gRPC over HTTP/2 implementation focused on high performance, interoperability, and flexibility. This library was created to have first class support of async/await and to act as a core building block for production systems written in Rust.

tonic is my favourite rust library.

It’s based on hyper and tokio.

Everything works the async/await way.

Use low-level APIs

The normal steps to develop a grpc client:

- write a .proto file

- compile it into a high-level API, via build.rs

- call the generated API

For example:

helloworld.proto

|

|

client.rs

|

|

But this is not suitable for lua, because the lua side is expected to call arbitrary protobuf interfaces without compiling a .proto file ahead of time.

So we have to look at the low-level API provided by tonic.

Let’s check the generated file:

helloworld.rs

|

|

message encode/decode

You can see that the request structure is marked with the ::prost::Message derive attribute.

And it uses tonic::codec::ProstCodec::default() to do the encoding.

This part could be done in lua using lua-protobuf.

It could do encode/decode based on the .proto file, just like JSON encode/decode.

Check grpc_client.lua for details.

But wait: if the encoding is done in lua, how do we tell rust to skip it?

The answer is a custom codec that replaces ProstCodec, so that we can transfer the byte array "as is".

|

|

For encode, we put the original byte array, already encoded by lua, into the request.

For decode, we copy the byte array to lua and let lua decode it later.

Then we can call unary directly.
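A rough sketch of the pass-through idea, written in Python only for brevity; the real codec is Rust code plugged into tonic. `PassthroughCodec`, `frame` and `unframe` are hypothetical names. The 5-byte prefix (a 1-byte compressed flag plus a 4-byte big-endian length) is the standard GRPC length-prefixed message framing, which the transport layer adds around whatever the codec emits.

```python
import struct

GRPC_PREFIX = struct.Struct("!BI")  # compressed flag, message length


class PassthroughCodec:
    """Hypothetical codec that never touches the payload: the bytes were
    already protobuf-encoded on the lua side."""

    def encode(self, item: bytes) -> bytes:
        return bytes(item)

    def decode(self, buf: bytes) -> bytes:
        # Hand the raw bytes back; lua decodes them later.
        return bytes(buf)


def frame(message: bytes, compressed: bool = False) -> bytes:
    # What the GRPC layer puts on the wire for one message.
    return GRPC_PREFIX.pack(int(compressed), len(message)) + message


def unframe(data: bytes):
    compressed, length = GRPC_PREFIX.unpack_from(data)
    body = data[GRPC_PREFIX.size:GRPC_PREFIX.size + length]
    if len(body) != length:
        raise ValueError("truncated GRPC message")
    return bool(compressed), body


codec = PassthroughCodec()
payload = b"\x0a\x05world"            # bytes as produced by the lua encoder
wire = frame(codec.encode(payload))   # prefix added, payload unchanged
flag, body = unframe(wire)
decoded = codec.decode(body)          # identical to what lua sent
```

The point of the design is that rust never needs to know the message schema: it only moves opaque bytes between lua and the network.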

connection management

Each GRPC request is made on a connection object (interestingly, the connection in tonic has a retry mechanism). I use a hashmap to store connections and assign each connection a unique string, so that the lua side can refer to it. In lua, you can close it explicitly or let the lua gc handle the close.

Each connection can be configured with TLS/MTLS info, e.g. CA certificate, private key.
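The bookkeeping can be pictured with a small registry like the one below. It is a Python sketch under my own assumptions, not the rust code: `ConnectionRegistry` is a hypothetical name, the `conn-N` id format is made up, and plain objects stand in for tonic connections.

```python
import itertools


class ConnectionRegistry:
    """Hypothetical registry mapping string ids to connection objects."""

    def __init__(self):
        self._conns = {}
        self._ids = itertools.count(1)

    def add(self, conn):
        # Hand out a unique string that the lua side can keep as a handle.
        conn_id = f"conn-{next(self._ids)}"
        self._conns[conn_id] = conn
        return conn_id

    def get(self, conn_id):
        return self._conns[conn_id]

    def close(self, conn_id):
        # Safe to call twice, e.g. an explicit close followed by a gc
        # finalizer; returns True only when something was removed.
        return self._conns.pop(conn_id, None) is not None


registry = ConnectionRegistry()
first = registry.add(object())
second = registry.add(object())
closed_once = registry.close(first)
closed_twice = registry.close(first)
```

Making `close` idempotent matters here because both the explicit close and the gc path may end up releasing the same id.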

message format of request and response

Now, let's look at the message format between lua and rust, which is the most important part.

request

|

|

We need to handle different kinds of operations, e.g. creating a connection or making a GRPC unary call, so we obviously need one structure that describes all the information.

- cmd: the command indicator, e.g. NEW_CONNECTION

- key: depends on cmd, could be connection URL, connection id or streaming id

- host: HTTP2 host header, used for TLS

- ca, cert, priv_key: TLS related stuff

- path: fully-qualified GRPC method string, e.g. /helloworld.Greeter/SayHello

- payload: the request byte array, protobuf-encoded and then base64-encoded by lua

Note that all optional fields use Option<>.

The structure is passed from lua as JSON. JSON encoding is fast in lua, so there is no need to worry about the overhead.

Check my blog post for a benchmark between JSON and protobuf if you are interested in this part.
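As an illustration of the request format, here is how such a message could be modelled and parsed. This is a Python stand-in for the rust structure, with several assumptions of mine: `GrpcCommand` and `parse_command` are hypothetical names, `cmd` is shown as an integer with a made-up value, and `key` is treated as always present. `Optional[...] = None` plays the role of Option<>.

```python
import base64
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class GrpcCommand:
    cmd: int                        # command indicator (value is made up)
    key: str                        # URL, connection id or streaming id
    host: Optional[str] = None      # HTTP2 host header, used for TLS
    ca: Optional[str] = None
    cert: Optional[str] = None
    priv_key: Optional[str] = None
    path: Optional[str] = None      # e.g. /helloworld.Greeter/SayHello
    payload: Optional[str] = None   # base64 of the protobuf bytes

    def payload_bytes(self) -> Optional[bytes]:
        # Undo the base64 layer; the protobuf bytes stay opaque.
        if self.payload is None:
            return None
        return base64.b64decode(self.payload)


def parse_command(raw: str) -> GrpcCommand:
    # Missing optional keys fall back to None, unknown keys raise TypeError.
    return GrpcCommand(**json.loads(raw))


raw = json.dumps({
    "cmd": 1,
    "key": "conn-1",
    "path": "/helloworld.Greeter/SayHello",
    "payload": base64.b64encode(b"\x0a\x05world").decode("ascii"),
})
command = parse_command(raw)
```

The base64 step exists because JSON cannot carry raw bytes, so the protobuf output from lua has to be wrapped in a text-safe form first.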

response

This part is more straightforward than the request, because each request type has only one kind of result.

For example, NEW_CONNECTION returns the connection id string, and UNARY returns the protobuf-encoded response from the network.

So returning a malloc()-allocated byte array is enough; there is no need to wrap it in JSON.

GRPC streaming

Under the hood, no matter which type the call is (unary request, client-side streaming, server-side streaming or bidirectional streaming), tonic encapsulates the request and response into a Stream object.

So we just need to establish the bridge between lua and Stream, then we could support GRPC streaming in lua.

send direction

We create a channel pair and wrap the receiver part into the request Stream.

|

|

Messages that lua sends into the channel are then forwarded to the network directly.

recv direction

We create an async task to handle the response retrieval.

|

|

close the send direction

Thanks to how channels work, when we drop the send part of the channel pair, the receiver part is closed, which in turn closes the send direction of the GRPC stream.
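The three pieces above (send direction, recv direction, closing) fit together roughly like this. The sketch uses Python's asyncio in place of tokio, so several things are stand-ins: `asyncio.Queue` plays the channel, the `_CLOSED` sentinel imitates dropping the sender (Python queues have no drop semantics), and `fake_bidi_call` is a made-up echo service instead of a real network call.

```python
import asyncio

_CLOSED = object()  # sentinel imitating "the send half was dropped"


async def channel_stream(queue):
    """Expose the receiving end of a queue as an async stream that ends
    once the sender has closed."""
    while True:
        item = await queue.get()
        if item is _CLOSED:
            return
        yield item


async def fake_bidi_call(requests, responses):
    # Stand-in for the streaming call: echo every request, then close
    # the response side when the request stream ends.
    async for msg in requests:
        await responses.put(b"echo:" + msg)
    await responses.put(_CLOSED)


async def demo():
    send_q = asyncio.Queue()   # lua -> network
    recv_q = asyncio.Queue()   # network -> lua
    # The async task that drives the call and collects responses.
    task = asyncio.create_task(
        fake_bidi_call(channel_stream(send_q), recv_q)
    )
    await send_q.put(b"a")
    await send_q.put(b"b")
    await send_q.put(_CLOSED)  # close the send direction
    received = [msg async for msg in channel_stream(recv_q)]
    await task
    return received


result = asyncio.run(demo())
```

Closing the send side is what lets the call finish: the request stream ends, the service completes, and the response stream ends in turn.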

Final look

Unary call:

|

|

Streaming calls:

|

|

Benchmark

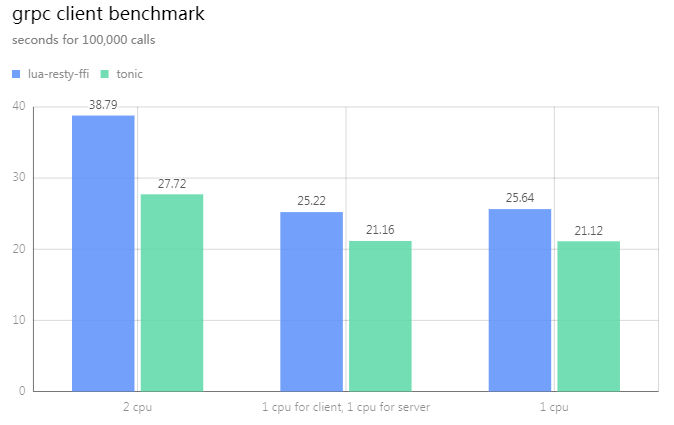

The most important question is: since we wrap tonic, how much overhead does that add?

I used an AWS EC2 t2.medium instance (Ubuntu 22.04) to run the benchmark.

It sends 100,000 calls, using lua-resty-ffi and the tonic helloworld client example respectively.

The result is in seconds, lower is better.

You can see that the overhead is 20% to 30%, depending on the CPU affinity. Not too bad, right?

Conclusion

With lua-resty-ffi, you can use your favourite mainstream programming language, e.g. Go, Java, Python or Rust, to do development in Openresty/Nginx, so that you can enjoy their rich ecosystems directly.

It took me only 400 lines of code (including lua and rust) to implement a complete GRPC client in OpenResty. And this is just an example, you could use lua-resty-ffi to do anything you want!

You are welcome to join the discussion and star my github repo: