◎ Scared timeline

TL;DR

In OpenResty, `ngx.location.capture()` lets us process upstream responses asynchronously with high performance.

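By design, nginx header and body filters run synchronously, so you should not perform blocking or long-running operations in them, such as blocking system calls, decryption, decompression, or forwarding the response to another server for further processing. OpenResty enforces this: the cosocket API and `ngx.location.capture()` are disabled in `header_filter_by_lua*` and `body_filter_by_lua*`.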

In contrast, Envoy supports asynchronous HTTP filters on the response path.

Yet some use cases require the response headers and body from the upstream server to be processed asynchronously before they are sent downstream, for example asking a Vault server to verify the digest of the body, or having a dedicated server scan the content for confidentiality reasons.

So we have to fetch the upstream response ourselves before the filter phases run, i.e. in the rewrite, access, or content phase, where Lua code is allowed to yield.

There are two ways to access the upstream asynchronously:

- lua-resty-http: a cosocket-based HTTP client written in pure Lua

- ngx.location.capture(): an OpenResty API based on nginx subrequests

Let's evaluate both and choose the better approach.

conf/nginx.conf


```nginx
worker_processes auto;
error_log logs/error.log info;
worker_rlimit_nofile 20480;

events {
    worker_connections 10620;
}

lua {}

http {
    lua_package_path "/usr/local/openresty/luajit/share/luajit-2.1.0-beta3/?.lua;/usr/local/share/lua/5.1/?.lua;/usr/local/share/lua/5.1/?/init.lua;/usr/local/openresty/luajit/share/lua/5.1/?.lua;/usr/local/openresty/luajit/share/lua/5.1/?/init.lua;;";

    underscores_in_headers on;
    resolver 127.0.0.53 ipv6=on;
    keepalive_requests 10000;

    upstream fake_upstream {
        server 127.0.0.1:8080;
        keepalive 320;
        keepalive_requests 10000;
        keepalive_timeout 60s;
    }

    # fake upstream server
    server {
        listen 0.0.0.0:8080 default_server reuseport;
        listen [::]:8080 default_server reuseport;

        location /get {
            return 200 "hello\n";
        }
    }

    server {
        listen 0.0.0.0:80 default_server reuseport;
        listen [::]:80 default_server reuseport;

        # proxy_pass location, internal use only
        location /pass {
            internal;
            # remove the subrequest URL prefix
            rewrite ^/pass/(.*)$ /$1 break;
            proxy_http_version 1.1;
            proxy_set_header Connection "";
            proxy_pass http://fake_upstream;
        }

        location / {
            content_by_lua_block {
                if ngx.var.arg_subreq then
                    -- async filter via subrequest
                    local res = ngx.location.capture("/pass" .. ngx.var.uri)
                    ngx.status = res.status
                    for k, v in pairs(res.header) do
                        ngx.header[k] = v
                    end

                    -- process body asynchronously here
                    -- (if you modify it, update Content-Length as well)
                    local body = res.body
                    -- ...

                    ngx.print(body)
                    return ngx.exit(ngx.HTTP_OK)

                elseif ngx.var.arg_resty then
                    -- async filter via lua-resty-http
                    local httpc = require("resty.http").new()
                    local res, err = httpc:request_uri("http://127.0.0.1:8080" .. ngx.var.uri)
                    if not res then
                        ngx.log(ngx.ERR, "upstream request failed: ", err)
                        return ngx.exit(ngx.HTTP_BAD_GATEWAY)
                    end

                    ngx.status = res.status
                    for k, v in pairs(res.headers) do
                        ngx.header[k] = v
                    end

                    -- process body asynchronously here
                    local body = res.body
                    -- ...

                    ngx.print(body)
                    return ngx.exit(ngx.HTTP_OK)
                end

                -- fall-through: plain proxying via an internal redirect
                ngx.exec("/pass" .. ngx.var.uri)
            }
        }
    }
}
```

The key point here is that the internal location /pass serves as a relay. When the catch-all location / handles a request, it issues a subrequest to /pass (`ngx.location.capture("/pass" .. ngx.var.uri)`); /pass strips the prefix to restore the original URI (`rewrite ^/pass/(.*)$ /$1 break;`) and proxies the request upstream, so the upstream is accessed exactly the way native nginx `proxy_pass` would access it.
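One caveat: `ngx.location.capture()` issues a GET subrequest by default, the parent's request body is only forwarded when the subrequest uses POST or PUT, and the call above does not pass the query string. The following is a minimal sketch of a variant of the subrequest branch, assuming you want the subrequest to mirror the client's method, query string, and body. The method table is my own choice and only covers common methods; anything else is rejected with 405.

```nginx
location / {
    content_by_lua_block {
        -- sketch: mirror the client's method, query string and body in the
        -- subrequest; methods missing from this table are rejected
        local methods = {
            GET = ngx.HTTP_GET, HEAD = ngx.HTTP_HEAD,
            POST = ngx.HTTP_POST, PUT = ngx.HTTP_PUT,
            PATCH = ngx.HTTP_PATCH, DELETE = ngx.HTTP_DELETE,
            OPTIONS = ngx.HTTP_OPTIONS,
        }
        local method = methods[ngx.req.get_method()]
        if not method then
            return ngx.exit(ngx.HTTP_NOT_ALLOWED)
        end

        -- the request body must be read before it can be forwarded
        ngx.req.read_body()

        local res = ngx.location.capture("/pass" .. ngx.var.uri, {
            method = method,
            args = ngx.var.args,          -- original query string (may be nil)
            always_forward_body = true,   -- forward the body for any method
        })

        ngx.status = res.status
        for k, v in pairs(res.header) do
            ngx.header[k] = v
        end
        ngx.print(res.body)
        return ngx.exit(ngx.HTTP_OK)
    }
}
```

Request headers need no extra work here, because nginx subrequests inherit the parent request's headers by default.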

Note that I'm using 127.0.0.1 as the only upstream IP here, but you can use balancer_by_lua_block to choose the upstream peer dynamically. A single /pass location is then enough to reach any upstream node. You can even use DNS names, as lua-resty-http does, as long as you resolve them in a phase that can yield (e.g. the access phase) before the balancer phase runs, because cosockets are not available in balancer_by_lua*.
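A minimal sketch of that idea follows, assuming lua-resty-dns (bundled with OpenResty) for the lookup. The hostname backend.example.com, port 8080, the upstream name dynamic_upstream and the ctx fields upstream_ip/upstream_port are all hypothetical. The lookup runs in the content phase of the parent request, where cosockets may yield, and the result reaches the balancer through the `ctx` option of `ngx.location.capture()`, which installs the given table as the subrequest's `ngx.ctx`.

```nginx
# in the http {} block
upstream dynamic_upstream {
    # placeholder address; the real peer is chosen in balancer_by_lua_block
    server 0.0.0.1;

    balancer_by_lua_block {
        local balancer = require("ngx.balancer")
        -- ngx.ctx is the table passed via the "ctx" option of capture()
        local ctx = ngx.ctx
        local ok, err = balancer.set_current_peer(ctx.upstream_ip, ctx.upstream_port)
        if not ok then
            ngx.log(ngx.ERR, "failed to set the current peer: ", err)
            return ngx.exit(500)
        end
    }

    keepalive 320;
}

# in the port-80 server {} block, replacing /pass and /
location /pass {
    internal;
    rewrite ^/pass/(.*)$ /$1 break;
    proxy_http_version 1.1;
    proxy_set_header Connection "";
    proxy_pass http://dynamic_upstream;
}

location / {
    content_by_lua_block {
        -- DNS lookup in a phase where cosockets are allowed to yield
        local resolver = require("resty.dns.resolver")
        local r, err = resolver:new{ nameservers = { "127.0.0.53" } }
        if not r then
            ngx.log(ngx.ERR, "failed to create resolver: ", err)
            return ngx.exit(ngx.HTTP_INTERNAL_SERVER_ERROR)
        end

        local answers, qerr = r:query("backend.example.com", { qtype = r.TYPE_A })
        if not answers or answers.errcode then
            ngx.log(ngx.ERR, "DNS query failed: ", qerr or answers.errstr)
            return ngx.exit(ngx.HTTP_BAD_GATEWAY)
        end

        -- take the first A record (the answer may also contain CNAMEs)
        local ip
        for _, ans in ipairs(answers) do
            if ans.type == r.TYPE_A then
                ip = ans.address
                break
            end
        end
        if not ip then
            return ngx.exit(ngx.HTTP_BAD_GATEWAY)
        end

        local res = ngx.location.capture("/pass" .. ngx.var.uri, {
            ctx = { upstream_ip = ip, upstream_port = 8080 },
        })
        ngx.status = res.status
        for k, v in pairs(res.header) do
            ngx.header[k] = v
        end
        ngx.print(res.body)
        return ngx.exit(ngx.HTTP_OK)
    }
}
```

In a real deployment you would likely cache the DNS answers (for example in a shared dict) rather than query on every request; the sketch keeps the lookup inline for clarity.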

Let’s test it:


```shell
mkdir -p /opt/subreq/{conf,logs}
cd /opt/subreq/
# create and edit conf/nginx.conf
nginx -p $PWD -c conf/nginx.conf -g 'daemon off; error_log /dev/stderr info;'

# check if it works (from another terminal, since nginx runs in the foreground)
curl http://localhost/get -v
* processing: http://localhost/get
* Trying [::1]:80...
* Connected to localhost (::1) port 80
> GET /get HTTP/1.1
> Host: localhost
> User-Agent: curl/8.3.0-DEV
> Accept: */*
>
< HTTP/1.1 200 OK
< Server: openresty/1.25.1.1
< Date: Thu, 04 Jan 2024 08:56:04 GMT
< Content-Type: text/plain
< Content-Length: 6
< Connection: keep-alive
<
hello

curl 'http://localhost/get?subreq=1'
hello

curl 'http://localhost/get?resty=1'
hello
```

I use k6 to test latency and throughput.

subreq.js


```javascript
import http from 'k6/http';
import { sleep } from 'k6';

// URL and SLEEP are passed on the command line with -e
export default function () {
  http.get(__ENV.URL);
  sleep(Number(__ENV.SLEEP || 0));
}
```

Test commands:


```shell
# fall-through, i.e. the normal path to the upstream
k6 run -u 1 -d 30s -e 'URL=http://dev/get' -e 'SLEEP=0' -q subreq.js

# subrequest
k6 run -u 1 -d 30s -e 'URL=http://dev/get?subreq=1' -e 'SLEEP=0' -q subreq.js

# lua-resty-http
k6 run -u 1 -d 30s -e 'URL=http://dev/get?resty=1' -e 'SLEEP=0' -q subreq.js
```

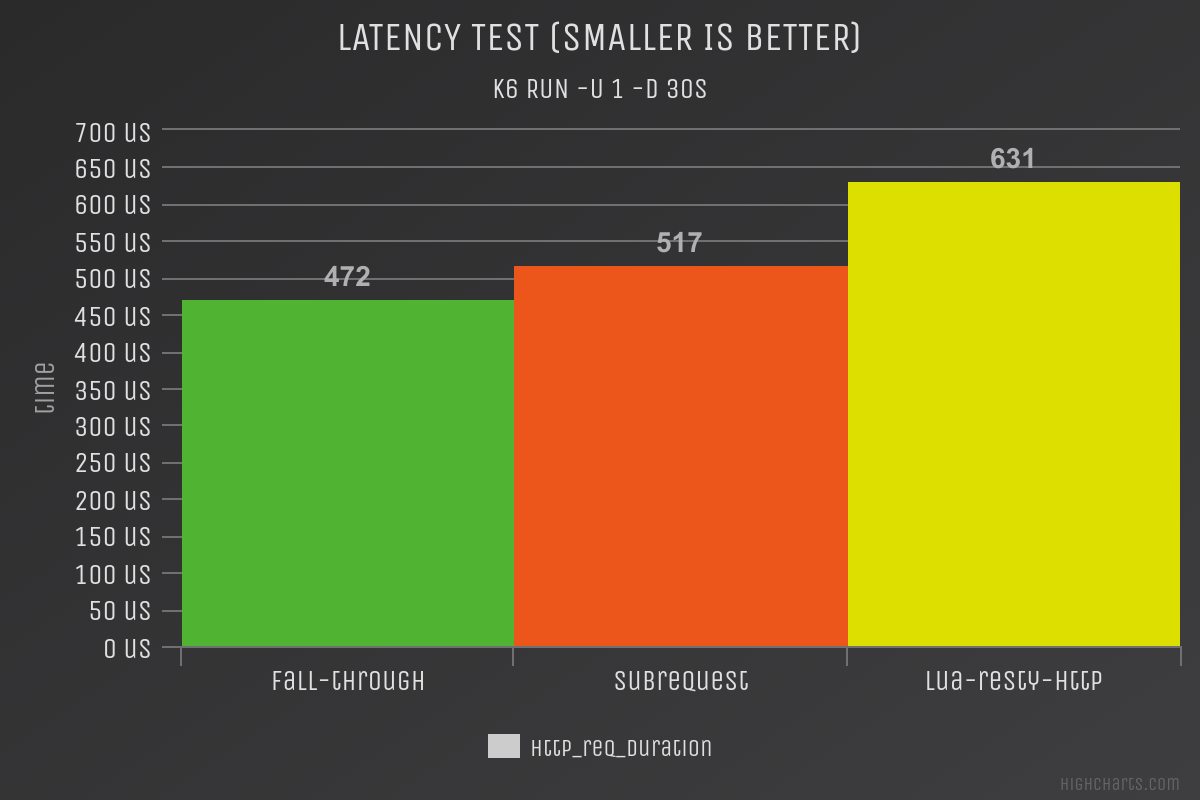

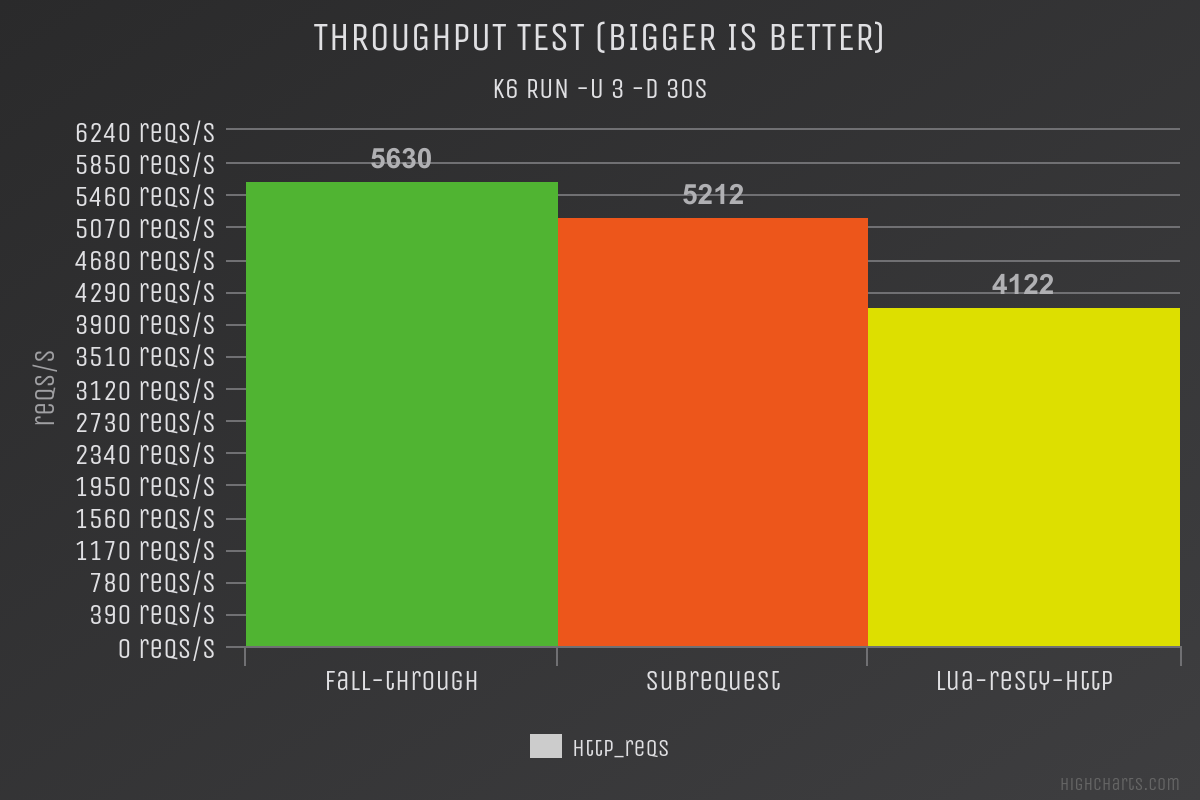

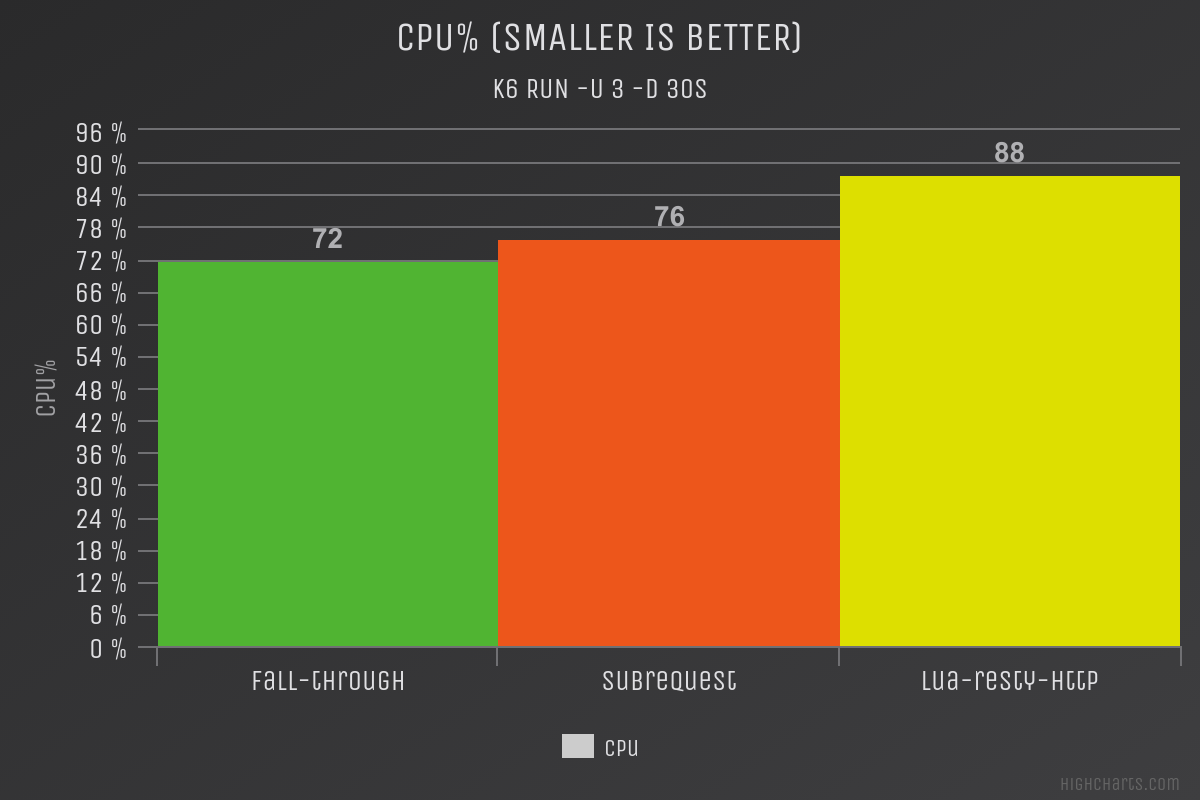

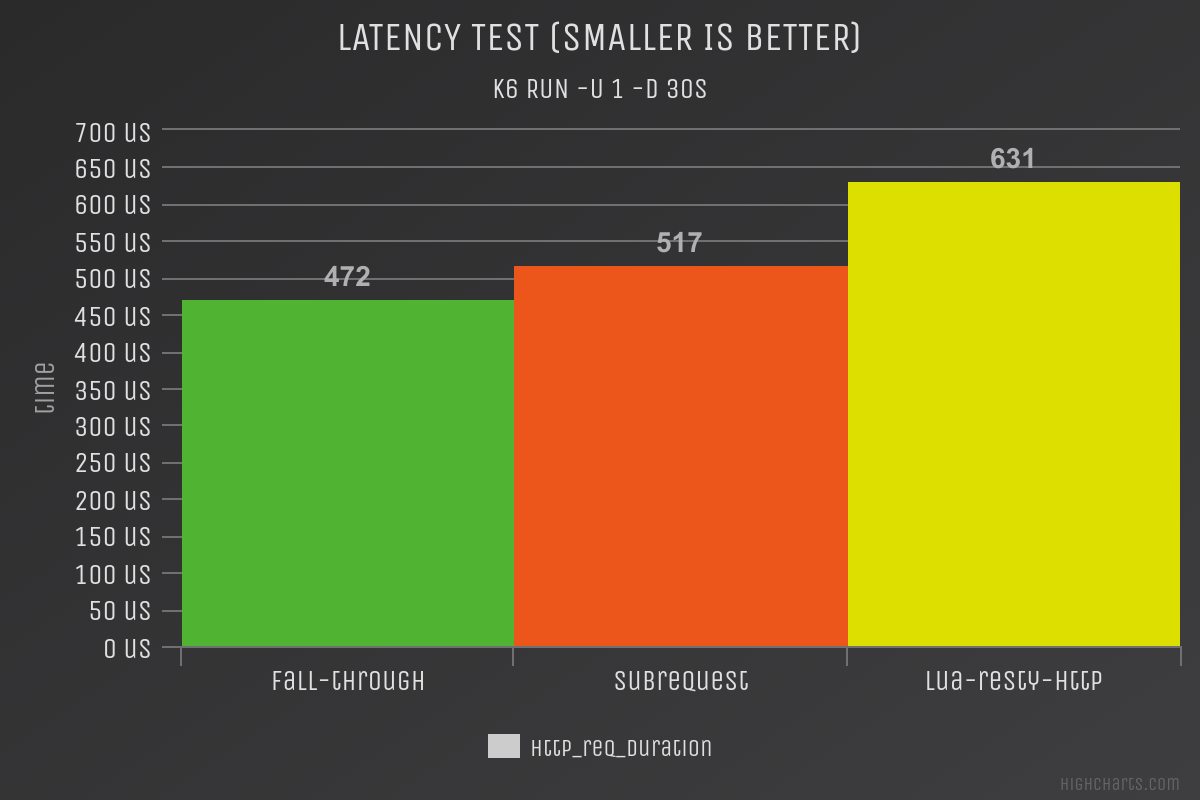

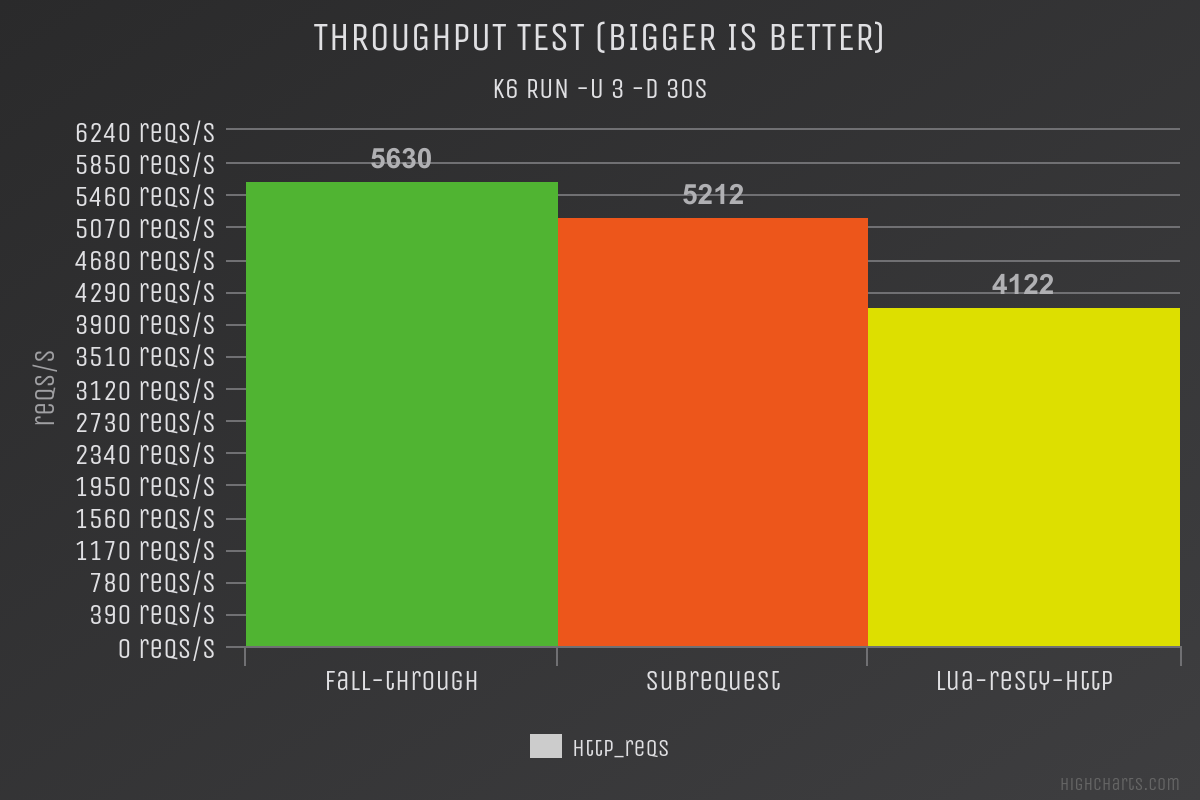

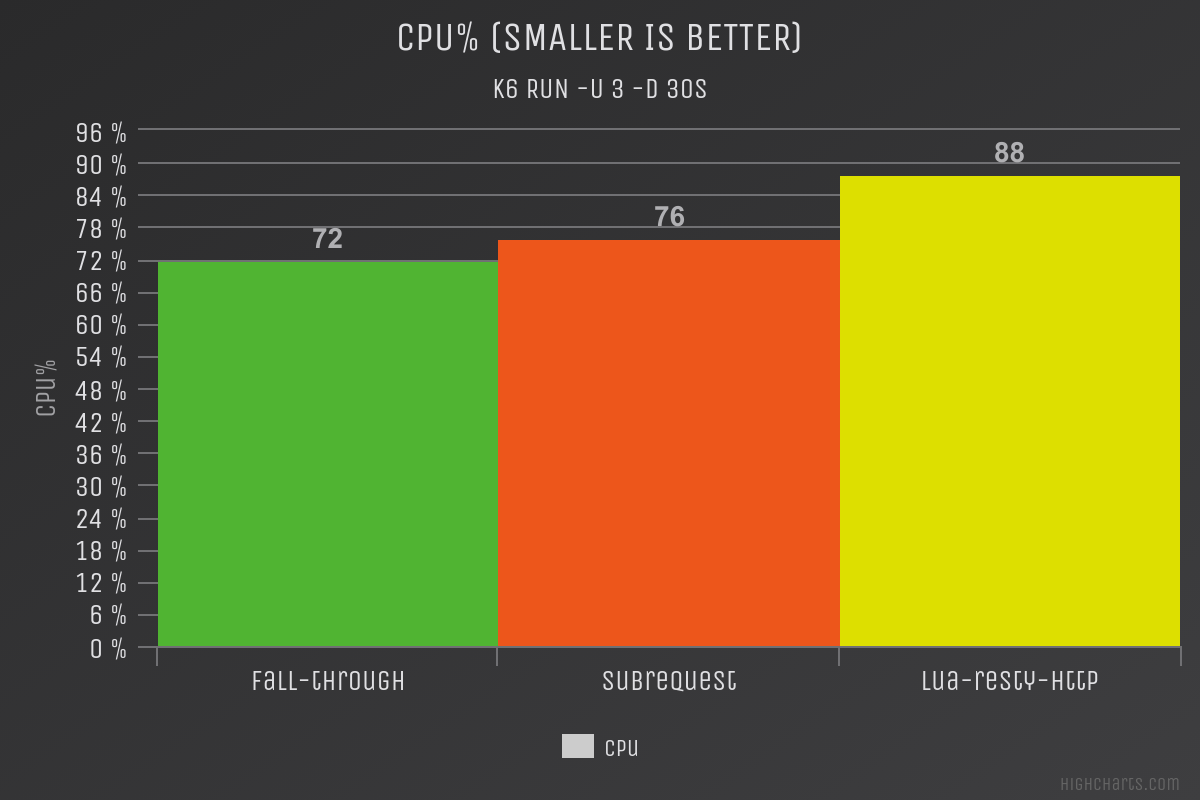

As the results above show, the subrequest approach performs almost identically to native nginx proxying in latency, throughput, and CPU usage.

lua-resty-http, on the other hand, is much worse than the subrequest approach in latency, throughput, and CPU usage, mainly because it handles HTTP in pure Lua code that LuaJIT cannot fully JIT-compile.


- lua-resty-http
  - Pros:
    - No need for location configuration in nginx.conf
  - Cons:
    - Poor performance
    - Only supports HTTP/1.1

- ngx.location.capture()
  - Pros:
    - Supports all upstream protocols of nginx
    - Very good performance with almost zero overhead
  - Cons:
    - Requires subtle location configuration in nginx.conf

If you need an asynchronous body filter written in Lua, ngx.location.capture() is the better choice.
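To make this concrete, here is a sketch of such a filter, assuming a hypothetical scanning service at 127.0.0.1:9000 that accepts the body via `POST /scan` and answers 200 when the content is acceptable (the service, its address, and its API are made up for illustration). The scan call is itself a second subrequest, so both upstream calls stay on nginx's native upstream path; `/pass` is the internal location from conf/nginx.conf.

```nginx
# in the http {} block: the hypothetical scanner
upstream scanner {
    server 127.0.0.1:9000;
    keepalive 32;
}

# in the port-80 server {} block
location /scan {
    internal;
    proxy_http_version 1.1;
    proxy_set_header Connection "";
    proxy_pass http://scanner;
}

location / {
    content_by_lua_block {
        -- 1. fetch the upstream response (buffered entirely in memory)
        local res = ngx.location.capture("/pass" .. ngx.var.uri)

        -- 2. asynchronous filter: the request's coroutine yields while the
        --    scanner works, so the worker keeps serving other requests
        local scan = ngx.location.capture("/scan", {
            method = ngx.HTTP_POST,
            body = res.body,
        })
        if scan.status ~= ngx.HTTP_OK then
            ngx.log(ngx.WARN, "scanner rejected ", ngx.var.uri, ", status: ", scan.status)
            return ngx.exit(ngx.HTTP_FORBIDDEN)
        end

        -- 3. only now send the original status, headers and body downstream
        ngx.status = res.status
        for k, v in pairs(res.header) do
            ngx.header[k] = v
        end
        ngx.print(res.body)
        return ngx.exit(ngx.HTTP_OK)
    }
}
```

Because `ngx.location.capture()` buffers the whole response body in memory, this pattern suits responses of bounded size; very large or streaming bodies would need a different design.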
